Few aspects of an organization’s structure have grown more valuable since the advent of the Information Age than the data center. A data center is any physical or virtual infrastructure that houses a company’s information technology resources, including networking, server and computer systems. Currently, the world’s largest single data center campus is operated by data ecosystem provider Switch; that company’s Las Vegas Digital Exchange Campus hosts 1.51 million square feet of operating data center facility at the time of this writing. This is soon to be dwarfed, however, by a 6.3 million square foot facility that will open this year in Langfang, China. Today’s distributed computing technologies have resulted in a more abstract concept of the data center, which could include dispersed networked resources at a remote location.

By August 2007, 1.5 percent of all electricity consumed in the United States was being swallowed up by data centers, according to a Lawrence Berkeley National Laboratory report to Congress at that time. This electricity is necessary not only to power the data center’s computing resources but also other important system components, like the cooling systems that keep a data center’s temperature within an optimal operating range. Data centers can run at temperatures greater than 90°F, but excessive processing loads or even hot weather can push temperatures higher still, increasing the risk of damaging data center components or causing servers to crash.

Any reader will know that water and high loads of electricity are a poor mix, at least where human safety is concerned, but multinational tech firm Microsoft Corporation (NASDAQ:MSFT) is trying to take data center activities into the great expanse that famed science fiction writer Jules Verne referred to as “the Living Infinite”: the sea. Since late 2014, Microsoft has been working on a subsea data center operation known as Project Natick, which seeks to speed up data delivery for the nearly half of the world’s population that lives within 200 kilometers (about 125 miles) of an ocean coastline. A recent 105-day Project Natick trial, in which a steel capsule containing data center hardware was stationed off the Pacific Ocean coast near San Luis Obispo, CA, was completed without the capsule springing any leaks. The subsea data center would be cooled by the surrounding water, and designs that pair it with turbines or tidal energy systems, which would further reduce electricity costs, have been considered. Although the data center’s aquatic environment is certainly a novel concept, the use of combined heat and power (CHP) plants to provide a cheap, dedicated power supply and temperature controls has been considered more often in recent months.

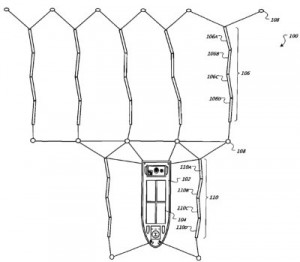

This is not the first time that a major tech power has looked into developing data centers for aquatic environments. Near the end of 2013, British newspaper The Guardian reported that Google was likely building floating data centers off the coasts of Maine and California using technology that it had patented back in 2009. That year, Google was issued U.S. Patent No. 7255207, titled Water-Based Data Center, protecting a data center with computing units, sea-based electrical generators for creating electricity from tidal changes and sea-water cooling units to regulate its temperature. The use of floating or water-based data centers could also create an interesting regulatory loophole for tech companies looking to pull data operations into international waters, where those operations are tougher to regulate than they would be within a country’s borders.

Globally, the market for data center hardware was increasing through the third quarter of 2015 and the mix of sector rivals includes some major tech players, according to market research firm Technology Business Research. Overall, this sector reached $21.7 billion in revenues during 2015’s third quarter, bolstered by business model moves among tech giants IBM (NYSE:IBM) and Cisco Systems (NASDAQ:CSCO).

IBM began a significant shift in its data center operations when it announced in January 2014 that it would commit $1.2 billion towards increasing its global cloud data center presence, largely in support of SoftLayer, a cloud infrastructure provider and IBM subsidiary. By June 2015, IBM was operating 40 cloud data centers across the world, including 24 data centers dedicated to SoftLayer. As we’ve reported in the past here on IPWatchdog, the cloud has been very profitable in recent years for IBM and its revenues from this sector have been helping to prop up its data center expenditures. IBM has been struggling with quarter-over-quarter declines for the past few years but there are some signs of a change in the tide, such as the first quarter of reported growth in four years for the company’s Power server systems during last year’s fourth quarter. In the beginning of February, IBM announced the investment of an additional $5 million to expand its data center operations in Bogotá, Colombia.

Cisco is very aware of the importance of maintaining a solid position in the data center market, as it expects that global data center Internet protocol (IP) traffic will grow by 31 percent per year over the next five years, with the growth of cloud and mobile computing providing the impetus for this increase. However, Cisco’s most recent earnings report showed that the tech company saw a 3 percent year-over-year decline in its data center business, dropping its sales in that sector to $822 million. In early February, Cisco was also rattled by a recall it ordered on ruggedized Ethernet switch products that were found to be susceptible to an electrical fault which could lead to component failure or electrical fire.

Qualcomm (NASDAQ:QCOM) is also making a push into the data center sector and it seems to be coming at the expense of rival tech firm Intel (NASDAQ:INTC). There have been reports that Alphabet (NASDAQ:GOOG) is seriously considering switching from Intel’s data center server processors to products developed by Qualcomm. In January, both Intel and Qualcomm announced separate partnerships with Chinese entities to create data center solutions in that country which utilize processors developed by either tech firm. This challenge is coming in a sector over which Intel held 99 percent control as recently as last October.

Many innovative solutions in data center implementation and operation are coming from firms other than those residing in the tech world’s stratosphere. Global communications firm CenturyLink (NYSE:CTL) took home an award in data center operational excellence in January, due in large part to the company being the world’s first to commit to certifying its data centers for management and operations (M&O) standards developed by data center research and consulting organization Uptime Institute. Uptime’s M&O standard guidelines have been developed to reduce human error, the leading cause of data center downtime according to the organization’s own research.

Increasing network virtualization has been transforming the very work environments experienced at many telecommunications companies which are expanding their data center operations. Many telcos, such as AT&T (NYSE:T) and Verizon Communications (NYSE:VZ), are transforming their central office facilities into data centers housing commodity servers and switches which run telecommunication software programs, as opposed to the specialized hardware equipment of yore. AT&T has reportedly already converted 70 of its central office facilities around the United States into what the company calls “integrated cloud nodes.”

In recent weeks, the U.S. Patent and Trademark Office has issued dozens of patents for data center technologies and a noticeable number of them have been assigned to IBM, as is the case with U.S. Patent No. 9250636, entitled Coolant and Ambient Temperature Control for Chillerless Liquid Cooled Data Centers. It protects a cooling control method which involves measuring the temperature of air provided to a plurality of nodes by an air-to-liquid heat exchanger, measuring the temperature of a component of the plurality of nodes to find a maximum component temperature across all nodes, comparing the maximum component temperature to a first and second component threshold and comparing the air temperature to a first and second air threshold, and then controlling a proportion of coolant flow and a coolant flow rate to the air-to-liquid heat exchanger and to the plurality of nodes based on the comparison. The cooling control method is designed to overcome situations where the temperature difference between coolant entering the air-to-liquid heat exchanger and air leaving the exchanger can become a limiting factor on the rate of cooling.
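For readers who want a more concrete picture of the control loop described in that patent, here is a minimal Python sketch. It is an illustration only, not the claimed method: the names `Band`, `CoolantSetting` and `control_step`, the fixed 0.1 adjustment step and the specific rules for raising or lowering the flow are all assumptions made for this example. The patent summary above says only that the two temperatures are compared against two thresholds each and that the coolant flow rate and its split are controlled based on that comparison.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Band:
    """A first (lower) and second (upper) threshold, in degrees Celsius."""
    first: float
    second: float

    def position(self, value: float) -> int:
        """Return -1 below the band, 0 inside it, +1 above it."""
        if value < self.first:
            return -1
        if value > self.second:
            return 1
        return 0


@dataclass(frozen=True)
class CoolantSetting:
    flow_rate: float  # fraction of maximum pump output, 0.0 to 1.0
    air_share: float  # fraction of coolant routed to the air-to-liquid exchanger


def _clamp(x: float) -> float:
    """Keep a fraction inside [0, 1] and trim floating point noise."""
    return round(min(1.0, max(0.0, x)), 3)


def control_step(
    air_temp: float,
    component_temps: Sequence[float],
    air_band: Band,
    component_band: Band,
    current: CoolantSetting,
    step: float = 0.1,  # hypothetical adjustment size, not from the patent
) -> CoolantSetting:
    """One pass of the (assumed) threshold-based control rule."""
    # Maximum component temperature across all nodes.
    max_component = max(component_temps)
    air_pos = air_band.position(air_temp)
    comp_pos = component_band.position(max_component)

    # Flow rate: raise it if either reading is too hot, lower it only
    # when both readings sit below their first thresholds.
    flow = current.flow_rate
    if air_pos > 0 or comp_pos > 0:
        flow += step
    elif air_pos < 0 and comp_pos < 0:
        flow -= step

    # Proportion: shift coolant toward whichever side is worse off
    # relative to its own thresholds.
    share = current.air_share
    if air_pos > comp_pos:
        share += step
    elif comp_pos > air_pos:
        share -= step

    return CoolantSetting(_clamp(flow), _clamp(share))
```

With a starting point of `CoolantSetting(0.5, 0.5)`, an air reading inside its band and one component above its second threshold, this sketch raises the overall flow rate and shifts coolant away from the heat exchanger and toward the nodes.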

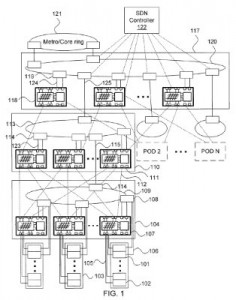

The need for high resource efficiency due to the explosive growth of cloud-centric applications has pushed NEC Laboratories America of Princeton, NJ, to develop the technology protected by U.S. Patent No. 9247325, titled Hybrid Electro-Optical Distributed Software-Defined Data Center Architecture. It discloses a hybrid electro-optical data center system having a bottom tier with multiple bottom tier instances, each comprised of one or more racks, an electro-optical switch corresponding to each rack and a bottom tier optical loop providing optical connectivity between the electro-optical switches of each bottom tier instance; the system also has a top tier having multiple electro-optical switches, each electrically connected to electro-optical switches in a respective bottom tier instance, a first tier optical loop providing optical connectivity between the electro-optical switches of the first tier and optical add/drop modules providing optical connectivity between optical loops on the top and bottom tiers. This innovation is designed to statistically multiplex traffic onto a single optical channel in a hybrid data center, which uses interconnecting top of rack (TOR) switches and micro-electromechanical switches (MEMS), for the sharing of optical bandwidth among switch connections.

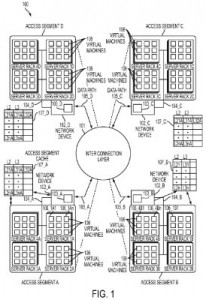

Data center innovation can also be found beyond the borders of the United States, as is evidenced by U.S. Patent No. 9253141, entitled Scaling Address Resolution for Massive Data Centers and issued to an Israeli subsidiary of the Bermuda-based semiconductor producer Marvell Technology Group (NASDAQ:MRVL). It claims a network device disposed at an interface between a first access segment and an interconnecting layer of a data center and having an address resolution processor that receives an address request, specifying both a source layer address and a layer address of the target virtual machine, addressed to virtual machines in a broadcast domain, transmits a local message over the first access segment requesting the respective layer address of a virtual machine which has a respective layer address corresponding to the specified layer address, and then transmits a reply message to the specified source layer address which provides the layer address of the network device and the layer address of the virtual machine which has the specified layer address. This innovation for massive data centers, which may serve hundreds of thousands of virtual machines, helps to address the problems that arise when broadcast domains scale up significantly as virtual machines migrate to different access segments.
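The request-and-reply flow in that claim can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not Marvell's implementation: the class and field names are hypothetical, the "local message" over the access segment is reduced to a lookup in a set, and the choice of a hardware-style address for the requester and device and an IP-style address for the virtual machine is a guess, since the summary above does not say which network layers are involved.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class AddressRequest:
    source_addr: str  # address of the requester (assumed hardware-style)
    target_addr: str  # address of the target virtual machine (assumed IP-style)


@dataclass(frozen=True)
class AddressReply:
    to_addr: str      # the requester named in the original request
    device_addr: str  # address of the edge network device itself
    vm_addr: str      # address of the virtual machine that was asked about


class EdgeDevice:
    """Hypothetical device sitting between one access segment and the
    data center's interconnecting layer."""

    def __init__(self, device_addr: str, local_vm_addrs: Iterable[str]) -> None:
        self.device_addr = device_addr
        # Stand-in for the VMs reachable on this device's access segment.
        self._local_vms = set(local_vm_addrs)

    def _query_access_segment(self, target_addr: str) -> bool:
        """Stand-in for the local message sent over the access segment."""
        return target_addr in self._local_vms

    def handle(self, request: AddressRequest) -> Optional[AddressReply]:
        """Answer a request on behalf of a local VM, or stay silent."""
        if not self._query_access_segment(request.target_addr):
            return None
        # The reply carries the device's own address alongside the VM's,
        # so the requester does not need the VM's address on the local segment.
        return AddressReply(
            to_addr=request.source_addr,
            device_addr=self.device_addr,
            vm_addr=request.target_addr,
        )


edge = EdgeDevice("02:00:00:00:00:fe", ["10.0.0.5", "10.0.0.6"])
reply = edge.handle(AddressRequest("02:00:00:00:00:01", "10.0.0.5"))
```

Because the device answers with its own address, requesters elsewhere in the data center only need to learn one address per access segment, which is one plausible reading of how the approach keeps broadcast domains manageable.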
