“At the PTO we will work to provide more concrete tests – to the extent possible given Supreme Court precedent,” Director Iancu said, speaking about patent eligibility. “This is an area we must all address, and one on which we will continue to engage this Committee…”

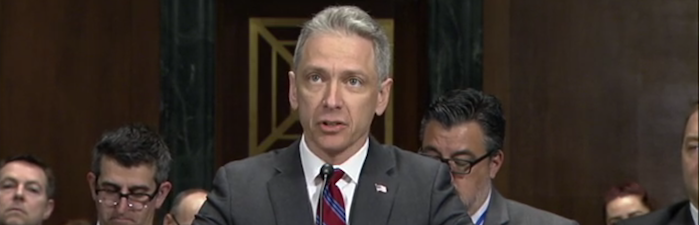

This morning USPTO Director Andrei Iancu appeared before the Senate Judiciary Committee to testify at an oversight hearing.

Senator Chris Coons (D-DE) led off for the Democrats after Chairman Chuck Grassley (R-IA) made a brief opening statement. Coons rather quickly moved his remarks toward the recent report from the U.S. Chamber of Commerce, which now ranks the U.S. patent system 12th in the world. “One cause is the impact of the new post grant proceedings before the Patent Trial and Appeal Board,” Coons said. “The current review system is systematically biased against patent owners based on statistics from its first five years.”

Coons went on to succinctly explain the very real threat posed by the PTAB: faced with the omnipresent risk of an overly active body that is aggressively destroying patent rights, investors are becoming increasingly reluctant to invest in early-stage technologies and start-up companies.

With respect to the drag the PTAB is having on the patent system and on investment, Coons said:

This dynamic has left investors with a growing impression that putting money behind innovative ideas backed only by a patent may not be a wise investment strategy, to the detriment of innovators and ultimately of the entire American economy. Another critical problem facing our patent system is the lack of clarity on which inventions are and are not eligible for patent protection. Recent Supreme Court decisions have called into question whether patents are appropriate to protect innovations in some of the most dynamic areas of our economy: software development and medical diagnostics. While the impact may be noticeable to patent practitioners and inventors, if corrective action isn’t taken, in years to come the broader public may be asking why all the newest and most advanced innovations and products in these areas are created somewhere other than the United States.

Director Iancu’s prepared remarks were entered into the record, and he was offered some time to make a brief statement. During this time Iancu specifically spoke about several important issues, including PTAB proceedings and patent eligibility.

PTAB Proceedings

With respect to PTAB proceedings, Director Iancu told the Committee:

We are reviewing [PTAB proceedings] carefully to ensure that the Agency’s approach to these critical proceedings is consistent with the intent of the AIA and the overall goal of ensuring predictable, high quality patent rights. We are currently studying, among other things, the institution decision, claim construction, the amendment process, composition of judging panels, the conduct of hearings and the variety of standard operating procedures.

While this is largely a summary of his written testimony, it does go further. In his written testimony Director Iancu wrote that the Office is studying issues that “include the institution decision, claim construction, the amendment process, and the conduct of hearings.” Adding the composition of judging panels to the list could well signal that Director Iancu is specifically considering separating the institution decision from the merits decision, as critics of the post grant process have urged. Similarly, reviewing standard operating procedures could mean many things, but it suggests some appreciation for the structural issues and lack of transparency that have dogged a stubborn PTAB, which at times almost seems willfully to have a tin ear.

Patent Eligibility

“The patent eligibility legal landscape is also a critical area we are exploring,” Director Iancu told the Senate Judiciary Committee. “We are considering ways to increase the certainty and predictability of the eligibility analysis.”

Director Iancu also acknowledged that the statute in question, 35 U.S.C. 101, has not substantially changed in decades, but that recent Supreme Court decisions have introduced uncertainty that must be addressed. Iancu said:

While the statutory language of Section 101 has not been substantially changed for decades, in recent years the Supreme Court has issued decisions on patentable subject matter that have introduced a degree of uncertainty into this area of law. At the PTO we will work to provide more concrete tests – to the extent possible given Supreme Court precedent – that guide examiners and the public toward finding the appropriate lines to draw with respect to eligible subject matter. This is an area we must all address, and one on which we will continue to engage this Committee, stakeholders and the public, and should Congress decide to explore a legislative adjustment of Section 101 we would work with this Committee and with stakeholders to explore viable options.

It sounds as if Director Iancu is laying the foundation for legislative changes to 101. What a change in tone compared with the previous Administration.


Join the Discussion

19 comments so far.

B

April 19, 2018 03:34 pm

FYI, Bob Bahr released new guidelines today re Berkheimer, and they are actually useful w.r.t. evidence. Note that the CAFC is presently considering a rehearing en banc, and grant/denial should come within the next two weeks, tops.

Too bad Bahr couldn’t bother to read Alice and Mayo, both of which were decided based on intrinsic and/or extrinsic evidence.

B

April 19, 2018 12:11 pm

“This is precisely why I advance my legal notion of Void for Vagueness.”

Anon – you win the internet today. FWIW, I think the SCOTUS should have done a bit of research on the 1952 Patent Act and Giles Rich’s law review articles on it before penning Alice Corp. That said, I think the CAFC should have paid closer attention to what Alice and Mayo actually hold. Now we get to the USPTO’s internal guidelines, which are the opposite of useful.

The only way to understand some decisions, such as Electric Power Group, is to read the briefs, listen to the CAFC oral argument, and then parse the claims carefully. There’s an argument to be made that the CAFC had a correct outcome – but the written decision is pathetic, misleading and legally incorrect.

BTW, Gene – I do have an article coming.

Anon

April 19, 2018 11:32 am

Gene @ 9:

“that reconciles all Supreme Court and Federal Circuit decisions.”

This is precisely why I advance my legal notion of Void for Vagueness.

Even if (if only for argument’s sake) we allow the Supreme Court to violate the separation of powers doctrine and write patent law (with their “exceptions”), the law that they write must – like any (proper) legislatively written law – not succumb to being void for vagueness. As Justice Gorsuch recently noted (on non-patent law matters), a law that falls to being “Void for Vagueness” is a law that violates a first principle of due process in that the law cannot be confidently applied a priori.

And that is exactly what we have in how the Supreme Court’s “gift” plays out – NOT ONLY within the cases of the Supreme Court itself, but in the “ping-pong” effects that we continue to see in the lower courts.

All this reverberates in history – Congress, upset with an anti-patent Supreme Court of the 1930s and 1940s (with the self-described label of “the only valid patent is one that has not yet appeared before us”), removed the authority previously allocated to the judicial branch to set the meaning of the term “invention” through the power of common law evolution. They did so in the Act of 1952, providing, in place of “invention,” the new requirement of non-obviousness.

The Court simply was not setting that definition – and instead, through such terms as “gist of the invention” (and myriad [pun intended] other “labels”), had set itself up as a type of “final arbiter” to decide whether something “deserved” patent protection (a la, whether that something had the “Flash of Genius” or not).

ALL of this has been written about by the judicial member who perhaps had the best appreciation of what Congress did in the Act of 1952 (in part, because he helped write that Act): Judge Rich.

Since the Supreme Court refuses to accept what Congress has done, and since any new “legislative correction” may eventually suffer the same fate as being twisted (as an interpretation) by the Court, I have often advanced that the only true long term solution is to remove the (wax-addicted) Court from the non-Supreme-Court-original-jurisdiction of patent appeals, with Congress exercising its Constitutional power of jurisdiction stripping. I add the caveat that Marbury can easily be preserved with Congress acting to set up a new Article III body for review – a body not tainted as is the current CAFC.

B

April 19, 2018 09:03 am

“DDR Holdings seems hopelessly out of date on its understanding of computer science and software engineering.”

DDR Holdings is correct because the CAFC had preemption in mind, used evidence as a guide, and construed Alice Corp narrowly.

Joachim Martillo

April 19, 2018 05:38 am

Here’s the rest of the comment.

In the above DDR Holdings claims, a web page was just calculated/generated at a server from some (browser) terminal inputs (something quite trivial with common automatic web page generation systems) and displayed on a (browser) terminal. The server queried a database that was also located within the NORMA distributed computer system. These claims are at least as directed to an abstract idea as those at issue in Gottschalk v. Benson.

Note that as a PHOSITA using the ’399 patent as a guide, I would first implement the DDR Holdings method of Claim 1 on a Linux system by installing the MongoDB database server along with the Node.js server-side scripting system. I would then install one of the Node.js MongoDB interface packages along with the Node.js Express server framework package. Then I would simply code the described DDR Holdings server. The whole server is unlikely to exceed a few hundred lines. I could test the whole system by means of a Chrome, Mozilla, or Safari browser installed on the Linux system. Voilà: I have implemented the method of Claim 1 in three local programs (my server script + off-the-shelf browser + MongoDB server) on my Linux system. Because I implemented the server by means of Node.js, the server script could be run on any computer system that hosts Node.js and implements WWW protocols. The server script could access a MongoDB server on any computer system that implements WWW protocols. In other words, I just implemented a system that implements the method of Claim 1. As far as I can tell, the USPTO and the CAFC have allowed patent claims whose inputs and outputs are purely within a single (distributed) multiprocessor computer system and that don’t in any way represent an improvement to the WWW viewed as a vast NORMA distributed computer system or metacomputer. All of the ’399 independent claims should be ineligible under § 101 even though the CAFC incorrectly decided otherwise.

Note that the USPTO abstract idea guidance might provide ways to formulate Web-related claims that would be eligible under § 101. The desktop/laptop has a sort of dual personality/identity/aspect. If the user terminates the browser, the user’s computer is no longer part of the vast NORMA distributed computer system (or metacomputer).

If the browser uploaded a file from the laptop/desktop to a web server that analyzed it and generated a new/modified file that is saved to file storage on the user’s desktop/laptop computer and that could be reviewed by the user independent of the World Wide Web (browser) and of the vast NORMA distributed computer system that the WWW forms, a claim could be written that avoided § 101 ineligibility.

I have also seen robotic systems that are controlled (or at least configured) via the Web. Such systems would probably be § 101 eligible according to the precedent of Diamond v. Diehr, 450 U.S. 175 (1981).

BTW, the logic that renders the ‘399 patent invalid under § 101 should also apply to the Google Panda patent (US 8682892) and similar patents. The USPTO needs to seriously reevaluate § 101 eligibility within the context of NORMA distributed systems, and judges in the district court system as well as those on the CAFC need to be brought up to speed with respect to modern distributed computing concepts before the patent system is inundated by a new flood of trash patents.

Joachim Martillo

April 19, 2018 05:36 am

For some reason I am unable to post the DDR Holdings Claim 1 in this comment.

You can see it here.

Joachim Martillo

April 19, 2018 05:20 am

DDR Holdings seems hopelessly out of date on its understanding of computer science and software engineering.

From some notes on 101-eligibility. (For active hyperlinks, use the preceding weblink.)

In DDR Holdings, the CAFC seems to have been distracted by the networking protocols.

The World Wide Web/Internet is a vast NORMA (defined below) distributed multiprocessor system or metacomputer.

Today practically all computer systems are multiprocessor systems because almost all the major CPU chips are available in multicore versions that contain multiple processor cores that run in parallel. (It has been a long time since I have actually worked with a single core single processor system although I know one could still design such a system with available microprocessor chips.)

Generally multiprocessor systems fit into three categories:

UMA (Uniform Memory Access) – all processors see memory in exactly the same way,

NUMA (Non-Uniform Memory Access) – each processor can access its local memory quickly and can access remote memory more slowly. Local memory to one processor is remote memory to a separate processor,

NORMA (No Remote Memory Access) – each processor accesses its local memory quickly and cannot access remote memory directly. Instead remote memory is typically accessed by message exchange (over some sort of network medium – like the Internet). In the past such systems have generally been IP based distributed systems that use RPC (remote procedure call) libraries to access resources. (Of course, in the past IBM, DEC, Prime, et alia all had their own proprietary distributed systems based on proprietary networking protocols.)

Various open and proprietary software packages are available as a means to run some number of separate computer systems as a NORMA distributed system. Beowulf is an example of an open software system meant to create a NORMA distributed cluster from off-the-shelf computer systems. Mercury Systems, Inc. is an example of a company that provides proprietary distributed cluster systems.

The latest distributed systems skip building RPC libraries and developing (or using) various sorts of resource location systems. Instead they piggy-back on WWW protocols and use extended versions of URLs (Uniform Resource Locators). SOAP (Simple Object Access Protocol), REST (Representational State Transfer), or combinations thereof are generally used in implementing a WWW-based NORMA distributed computing system.

Sometimes NORMA systems use a software layer to emulate NUMA systems.

When such software emulation is built into hardware logic, one typically refers to (NUMA) fabric-interconnected systems. StarFabric provides this capability. The DOD likes it, but generally fabric interconnect has been a solution in search of a problem.

If the CAFC judges who adjudicated DDR Holdings understood the WWW as computer scientists (like me) do, the claims of the DDR Holdings patents would have been invalidated by the precedent of Gottschalk v. Benson, 409 U.S. 63 (1972).

Below is the first independent claim of the ‘399 patent. As long as the desktop or laptop computer is running the browser, it is part of the vast NORMA distributed computer system that is the WWW.

B

April 19, 2018 12:48 am

“What exactly is the Patent Office supposed to do? Tell patent examiners to ignore the courts?”

Remember KSR? The PTO guidelines got that case right immediately, while it took the CAFC seven years to get it right in K/S HIMPP v. Hear-Wear, followed by Arendi v. Apple.

Meanwhile, Judge Linn seems like the sole voice of sanity in addressing the CAFC’s s101 inconsistencies.

BTW, DDR Holdings got it perfectly right imho.

B

April 19, 2018 12:40 am

All that’s required at the PTO to make a successful 101 rejection: declare anything and everything abstract and anything else conventional; berate the invention; and cite Ariosa. In three-quarters or more of cases, the PTAB will affirm. This is Star Chamber patent prosecution that God and Giles Rich can’t fight. Two years ago the CAFC would berate you on appeal for demanding evidence, addressing the claims as a whole, etc. The APA? Screw it!

I want 10% of those 14,000 s101 rejections taken to the CAFC. Do the math given the CAFC handles about 80 cases per month. Then consider the PTAB backlog won’t decrease until the CAFC and Bahr get their acts together.

The good news: there’s likely help coming in two months. Oh, it won’t be perfect, but it will be a step in the right direction.

B

April 19, 2018 12:14 am

“Why don’t you take a stab at writing a memo that describes the state of 101 that reconciles all Supreme Court and Federal Circuit decisions.”

I personally do not think all CAFC cases can be reconciled. This isn’t to say the CAFC reached the wrong result in every case, but the express holdings of some cases are idiotic.

Take, for example, McRO v. Bandai. The only difference between those claims and the prior art was pre-weighting of phonemes. Now, isn’t that just math? And isn’t math abstract? And don’t two abstract ideas still result in an abstract idea, as we were told in RecogniCorp? Ah, and was not Judge Stoll on both panels?

Don’t even get me started on Ariosa, where Reyna decoupled the sole reason justifying s101 exceptions, i.e., preemption, from the Alice/Mayo test.

As to Bahr, he’s had one job since 2014 – explaining 101. The PTO’s eligibility guidelines are beyond pathetic, and none of the hundreds of examiners I’ve questioned can make heads or tails of the guidelines.

With this in mind, there’s a reason there are nearly 14,000 s101 rejections in the PTAB queue, and I personally have over a dozen 101 appeals in that queue.

If I am a tiny bit hard on Bahr, it’s because he deserves it.

Gene Quinn

April 18, 2018 11:33 pm

B-

Why don’t you take a stab at writing a memo that describes the state of 101 that reconciles all Supreme Court and Federal Circuit decisions.

Not really sure why there is so much hatred for Bob Bahr. It is as if all the Bahr haters have suddenly forgotten that it is impossible to reconcile even just the Supreme Court 101 decisions, let alone the Federal Circuit decisions.

What exactly is the Patent Office supposed to do? Tell patent examiners to ignore the courts?

B

April 18, 2018 10:40 pm

“The PTO really doesn’t have that much control, though they can fix their 3600 problem and make IPRs more fair.”

The PTO has a lot of control, but their 101 braintrust cannot be fixed.

B

April 18, 2018 10:37 pm

101 is an issue “we must all address.”

Start by firing Robert Bahr. Seriously. Then burn all evidence of his writings covering 101. Then fire every examiner and APJ who refers to Bahr’s 101 guidelines.

Joachim Martillo

April 18, 2018 06:08 pm

In other words, an interface is program code found in a .java file that can be compiled to a .class file. The .class files can be packaged in a Java ARchive (JAR), which may have api as a suffix.

A C/C++ API is quite different from a Java API JAR. Yet because the C/C++ understanding of API is in a way more conventional and better understood (at least by the previous generation of programmers and coders), Google almost got away with outrageous copyright infringement.
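To make the contrast with C/C++ header files concrete, here is a minimal illustrative sketch. The names `Shape`, `Square` and `Main` are hypothetical and are not taken from the Oracle/Google dispute or from The Java™ Tutorials. The point it shows is that a Java interface is ordinary source code: compiling this single file with `javac` produces separate `Shape.class`, `Square.class` and `Main.class` files, and since Java 8 an interface may even carry executable method bodies (default methods).

```java
// A Java interface is ordinary source that javac compiles to its own Shape.class.
interface Shape {
    // Abstract method: every implementing class must supply a body.
    double area();

    // Default method (Java 8+): executable code living inside the interface itself.
    default String describe() {
        return "shape with area " + area();
    }
}

// A class that implements the interface (compiled to Square.class).
class Square implements Shape {
    private final double side;

    Square(double side) {
        this.side = side;
    }

    @Override
    public double area() {
        return side * side;
    }
}

public class Main {
    public static void main(String[] args) {
        Shape s = new Square(2.0);
        System.out.println(s.describe()); // prints "shape with area 4.0"
    }
}
```

A C header, by contrast, typically only declares function prototypes; the implementing code lives elsewhere.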

Joachim Martillo

April 18, 2018 06:07 pm

Anon@1,

Conventionality and conventional understanding, which are important aspects of determining patent eligibility, are often extremely context dependent.

We just saw the importance of context in the recent Google Oracle Java interface copyright controversy.

An Application Programming Interface (API) in C/C++ is not code but is for the most part a description of a selection of header files.

In Java header files don’t exist.

The Java™ Tutorials explains interface as follows:

Night Writer

April 18, 2018 04:43 pm

Of course the problem is the SCOTUS and the CAFC (as well as the AIA).

The PTO really doesn’t have that much control, though they can fix their 3600 problem and make IPRs more fair.

Ternary

April 18, 2018 04:28 pm

Instruction No. 1 to the Examining Corps: If you feel you must issue a 101 rejection over Alice, you are required to suggest what amendment(s) you deem acceptable to overcome the rejection.

angry dude

April 18, 2018 02:16 pm

address eBay, talking heads

Anon

April 18, 2018 01:21 pm

As to 101 and the statement that Director Iancu “told the Senate Judiciary Committee”: “We are considering ways to increase the certainty and predictability of the eligibility analysis.”

Start with the already existing USPTO requirements vis-à-vis Official Notice (not allowing any such unsubstantiated comments in the area of “state of the art”), and then realize that it is up to the examiner to provide facts and proper evidence to support any assertion of “conventionality” in the 101 context.

Also realize that this level of evidentiary support for conventionality is MORE than what is required for what examiners usually supply for novelty (102) or obviousness (103) rejections.

Something merely being known (or existing) is NOT the same as something being so widely adopted as to make the item “conventional.”

These are things that Director Iancu can immediately implement and require examiners to provide whenever an examiner attempts to make a 101 rejection.

Lacking this, it is clear (and should be clear) that the Office’s attempt at providing a prima facie case lacks critical support and cannot stand (thus, such attempts are negated by an applicant merely pointing out the deficiency, and the applicant is not required to do more to overcome the rejection).

I even give Director Iancu permission to use my words in his letter to all examiners clarifying the existing duty of examiners.